Hey guys!

In this post, I'm going to share with you a very practical script to identify tables and indexes without data compression and apply page-type data compression to the returned tables.

Using the query that I will share in this article, we will identify the tables and indexes in RowStore mode that are not using data compression

It is worth remembering that until the 2016 version, this data compression feature was exclusive to the Enterprise version (besides Trial and Developer), so if your version is lower than 2016 and in the Standard edition, you will not be able to use data compression that I describe in this article.

Why use data compression

Data compression offers several benefits. It saves disk space and can help improve performance for certain workloads.

The benefits of data compression come at the cost of increased CPU usage to compress and decompress the data, so it's important to understand the workload characteristics on a table before deciding on a compression strategy.

Data compression provides flexibility in terms of compression levels (row or page) and the objects you can compress (table, index, partition). This allows you to fine-tune the compression based on your data and workload characteristics.

Another key advantage of data compression is that it works transparently to applications and works well with other SQL Server features such as TDE and backup compression.

Compressed pages are kept compressed on disk and remain compressed when read into memory. Data is uncompressed (not the entire page, but just the data values of interest) when it meets one of the following conditions:

- It is read to filter, sort, join, as part of a query response.

- It is updated by an application.

There is no uncompressed copy in memory of the compressed page. Decompressing data consumes CPU.

However, because compressed data uses fewer pages of data, it also saves:

- Physical I/O: Because physical I/O is expensive from a workload perspective, reduced physical I/O often results in savings greater than the additional CPU cost to compress and decompress the data. Note that physical I/O is saved because less data is read from or written to disk and more data can remain cached in buffer pool memory.

- Logical I/O (if data is in memory): Since logical I/O consumes CPU, reduced logical I/O can sometimes offset the CPU cost of compressing and decompressing data.

What is Row Compression Level

This compression feature takes into account the type of variable data structures that define a column. Row Compression Level is a compression level that does not use any compression algorithm.

The main purpose of Row Compression Level is to reduce the storage of fixed type data, i.e. when you are enabling Row type compression, you are just changing the physical storage format of the data that is associated with a data type.

Row Level Compression extends the vardecimal storage format (integer, decimal, float, datetime, money, etc.) by storing data of all fixed-length types in a variable-length storage format. This type of compression will remove any extra bytes in the fixed data type.

NULL and 0 values, in all data types, are optimized to not occupy any bytes.

For example, we have a CHAR(100) column, which is using the Row Compression Level. This column will only use the amount of storage defined by the data. Like this? Let's store the phrase “SQL Server 2008” in the column. This sentence only contains 15 characters and only those 15 characters are stored as opposed to the 100 that were defined by the column, so you save 85% on storage space.

What is Page Compression Level

In previous versions of SQL Server, each value was stored on the page, regardless of whether the same value already appeared in the same column for some other rows on a page. In SQL Server 2008, the redundant or duplicate value will be stored only once within the page and will be referenced in all other occurrences, thus we have the Page Compression Level.

Basically, Page Compression Level is a superset of ROW compression, and it takes into account redundant data in one or more rows on a given page. It also uses prefix and dictionary compression.

The Page Compression Level method is smarter as it allows common data to be shared across rows on a given page.

This type of compression uses the following techniques:

- ROW COMPRESSION: Already seen above.

- PREFIX COMPRESSION: For each column on a page duplicate prefixes are identified. These prefixes are stored in Information Compression (CI) headers, which reside after the page header. A reference number is assigned to these prefixes and this reference number is used wherever these prefixes are being used, decreasing the amount of bytes used.

- DICTIONARY COMPRESSION: Looks for duplicate values off the page and stores them in the CI. The main difference between Prefix Compression and Dictionary Compression is that Prefix is restricted to just one column whereas Dictionary is applicable for the complete page.

Once the Prefix Compression has completed, the Dictionary Compression is applied and searches for repeated values anywhere on the page and stores them in the CI area. Unlike Prefix Compression, Dictionary Compression is not constrained to a column and can replace repeated values, which occur anywhere on a page.

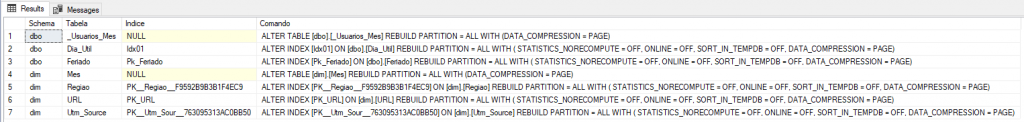

Script to identify tables and indexes without data compression

To identify tables and indexes without data compression and apply data compression, you can use the script below:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 |

SELECT DISTINCT C.[name] AS [Schema], A.[name] AS Tabela, NULL AS Indice, 'ALTER TABLE [' + C.[name] + '].[' + A.[name] + '] REBUILD PARTITION = ALL WITH (DATA_COMPRESSION = PAGE)' AS Comando FROM sys.tables A INNER JOIN sys.partitions B ON A.[object_id] = B.[object_id] INNER JOIN sys.schemas C ON A.[schema_id] = C.[schema_id] WHERE B.data_compression_desc = 'NONE' AND B.index_id = 0 -- HEAP AND A.[type] = 'U' UNION SELECT DISTINCT C.[name] AS [Schema], B.[name] AS Tabela, A.[name] AS Indice, 'ALTER INDEX [' + A.[name] + '] ON [' + C.[name] + '].[' + B.[name] + '] REBUILD PARTITION = ALL WITH ( STATISTICS_NORECOMPUTE = OFF, ONLINE = OFF, SORT_IN_TEMPDB = OFF, DATA_COMPRESSION = PAGE)' FROM sys.indexes A INNER JOIN sys.tables B ON A.[object_id] = B.[object_id] INNER JOIN sys.schemas C ON B.[schema_id] = C.[schema_id] INNER JOIN sys.partitions D ON A.[object_id] = D.[object_id] AND A.index_id = D.index_id WHERE D.data_compression_desc = 'NONE' AND D.index_id <> 0 AND A.[type] IN (1, 2) -- CLUSTERED e NONCLUSTERED (Rowstore) AND B.[type] = 'U' ORDER BY Tabela, Indice |

Page or Row data compression?

Microsoft's documentation (link here) gives us some tips to try to identify if the index should be created as page compression or row compression.

Run the script below:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 |

SELECT [o].[name] AS [Table_Name], [x].[name] AS [Index_Name], [i].[partition_number] AS [Partition], [i].[index_id] AS [Index_ID], [x].[type_desc] AS [Index_Type], [i].[leaf_update_count] * 100.0 / ([i].[range_scan_count] + [i].[leaf_insert_count] + [i].[leaf_delete_count] + [i].[leaf_update_count] + [i].[leaf_page_merge_count] + [i].[singleton_lookup_count]) AS [Percent_Update], [i].[range_scan_count] * 100.0 / ([i].range_scan_count + [i].leaf_insert_count + [i].leaf_delete_count + [i].leaf_update_count + [i].leaf_page_merge_count + [i].singleton_lookup_count) AS [Percent_Scan] FROM [sys].dm_db_index_operational_stats(DB_ID(), NULL, NULL, NULL) AS [i] JOIN [sys].[objects] AS [o] ON [o].[object_id] = [i].[object_id] JOIN [sys].[indexes] AS [x] ON [x].[object_id] = [i].[object_id] AND [x].[index_id] = [i].[index_id] WHERE ([i].[range_scan_count] + [i].[leaf_insert_count] + [i].[leaf_delete_count] + [i].[leaf_update_count] + [i].[leaf_page_merge_count] + [i].[singleton_lookup_count]) <> 0 AND OBJECTPROPERTY([i].[object_id], 'IsUserTable') = 1 ORDER BY [Percent_Update] ASC; |

The smaller the value of the “Percent_Update” column or the larger the value of the “Percent_Scan” column, the better candidate this index is for using page compression.

Some information to help you decide:

- Page compression looks for repeating patterns, as we saw in its mode of operation. So if your data doesn't have repeating patterns, you won't get much extra benefit from page compression. You'll see some extra CPU usage, but you probably won't see much of a performance gain to be worth it.

- The CPU overhead of row compression is generally minimal (typically less than or equal to 10%). If row compression results in space savings and the system can accommodate a 10% increase in CPU usage, then all data should be row-compressed.

- On average, row compression takes 1.5 times the CPU time used to rebuild an index, while page compression takes 2-5 times the CPU time used to rebuild an index

- Exemplos de tabelas que são boas candidatas para compactação de página são tabelas de log ou auditoria, que são escritas uma vez e raramente lidas.

- Utilize compressão de página quando o objetivo for reduzir espaço em disco, pois a compressão de página geralmente irá comprimir mais do que a compressão por linha

- Para obter a compressão máxima disponível, utilize a compressão COLUMNSTORE_ARCHIVE, mas ela não possui boa performance de leitura.

And that's it, folks!

I hope you enjoyed this tip and until next time!